# SHAREX BUFFER SIZE SOFTWARE

I think the GPU is utilised but I guess it's still Software Encoding according to you. Quote from: Jiehfeng on November 28, 2020, 04:02:59 PMI chose CUDA acceleration when I first started the project, but when exporting, selecting HEVC has it locked to "Software Encoding" and I can't change it. axes )) mask = map ( lambda x : True if x. set_title ( "Subject %s " % "AB" ) cb = plt. T, aspect = 'auto', interpolation = 'None', vmin =- max, vmax = max ) axes.

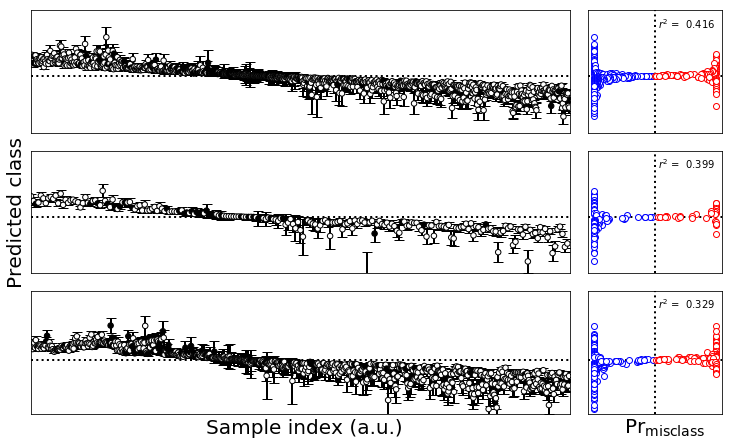

This is equivalent to reordering the classidices and calculating r2 r2 *= - 1 max = np. calculate_signed_r_square ( epo ) # switch the sign to make the plot more consistent with the timecourse.

subplots ( 2, 1, sharex = True, sharey = True ) for i in range ( 2 ): r2 = proc. ax_colorbar ( vmin, vmax, label = 'voltage ', ticks = )įig, axes = plt. subplot2grid (( n_classes, scale * n_ivals + 1 ), ( 0, scale * n_ivals ), rowspan = n_classes ) plot. text ( - 1.5, 0, , color = 'bm', rotation = 'vertical', verticalalignment = 'center' ) # colorbar ax = plt. text ( 0, - 1.5, ivals, horizontalalignment = 'center' ) if ival_idx = 0 : ax. axes, vmin = vmin, vmax = vmax ) if class_idx = 1 : ax. subplot2grid (( n_classes, scale * n_ivals + 1 ), ( class_idx, scale * ival_idx ), colspan = scale ) plot. max () vmax = round ( vmax ) vmin = - vmax for ival_idx in range ( n_ivals ): ax = plt. shape n_ivals = len ( ivals ) for class_idx in range ( n_classes ): vmax = np. jumping_means ( epo, ivals ) n_classes = epo. count_nonzero ( a = b ) / len ( a ) print 'Accuracy: %.1f%% ' % ( accuracy * 100 ) acc += accuracy print print 'Overal accuracy: %.1f%% ' % ( 100 * acc / 2 )ĭef plot_scalps ( epo, ivals ): # ratio scalp to colorbar width scale = 10 dat = proc. upper () print 'True labels : %s ' % labels a = np. reshape ( 100, - 1 ) text = '' for i in range ( 100 ): row = rows - 6 col = cols letter = MATRIX text += letter print print 'Result for subject %d ' % ( subject + 1 ) print 'Constructed labels: %s ' % text. sum ( axis = 1 ) # lda_out_prob = lda_out_prob. reshape ( 100, 15, 12 ) lda_out_prob = lda_out_prob, static_idx, unscramble_idx ] #lda_out_prob = lda_out_prob # destil the result of the 15 runs #lda_out_prob = lda_out_prob.prod(axis=1) lda_out_prob = lda_out_prob. lda_apply ( fv_test, cfy ) # unscramble the order of stimuli unscramble_idx = fv_test. lda_train ( fv_train ) # load the testing set dat = load_bcicomp3_ds2 ( testing_set ) fv_test, _ = preprocessing ( dat, MARKER_DEF_TEST, jumping_means_ivals ) # predict lda_out_prob = proc. 
Epo = acc = 0 for subject in range ( 2 ): if subject = 0 : training_set = TRAIN_A testing_set = TEST_A labels = TRUE_LABELS_A jumping_means_ivals = JUMPING_MEANS_IVALS_A else : training_set = TRAIN_B testing_set = TEST_B labels = TRUE_LABELS_B jumping_means_ivals = JUMPING_MEANS_IVALS_B # load the training set dat = load_bcicomp3_ds2 ( training_set ) fv_train, epo = preprocessing ( dat, MARKER_DEF_TRAIN, jumping_means_ivals ) # train the lda cfy = proc.
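The accuracy printed per subject in the listing is the fraction of positions where the constructed label string matches the true one (`count_nonzero(a == b) / len(a)`). The following is a minimal sketch of that calculation, not the original code: the function name `label_accuracy` and the sample strings are hypothetical, it uses plain Python 3 instead of NumPy so it runs without dependencies, and it assumes the comparison is made on the uppercased constructed text, as the `text.upper()` print suggests.

```python
def label_accuracy(constructed, true_labels):
    """Return the fraction of positions where the two label strings agree.

    Hypothetical helper for illustration; the listing computes the same
    ratio with np.count_nonzero(a == b) / len(a).
    """
    constructed = constructed.upper()  # assumption: compare uppercased text
    if len(constructed) != len(true_labels):
        raise ValueError("label strings must have the same length")
    if not constructed:
        raise ValueError("label strings must not be empty")
    # Count matching positions, then normalise by the string length.
    matches = sum(c == t for c, t in zip(constructed, true_labels))
    return matches / len(constructed)


if __name__ == "__main__":
    # Made-up label strings, for illustration only.
    accuracy = label_accuracy("wqxplz", "WQXPLA")
    print("Accuracy: %.1f%%" % (accuracy * 100))
```

One design point worth noting: the listing uses Python 2 `print` statements, and in Python 2 the `/` operator truncates when both operands are integers unless `from __future__ import division` is in effect. The sketch relies on Python 3, where `/` is always true division.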
